MALib: A parallel framework for population-based multi-agent reinforcement learning

Published on Mar. 2, 2023

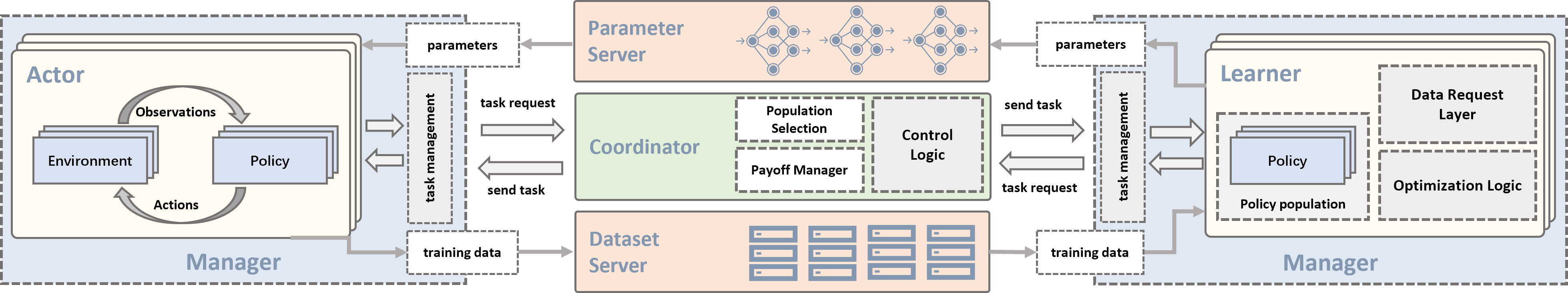

Population-based multi-agent reinforcement learning (PB-MARL) refers to a family of methods that combine dynamic population selection with multi-agent reinforcement learning (MARL) algorithms. Although PB-MARL has achieved impressive successes in some nontrivial multi-agent tasks, it suffers from low computational efficiency when executed sequentially, owing to the heterogeneity of its computing patterns and policy combinations. We argue for dispatching PB-MARL's subroutines through a stateless central task dispatcher combined with stateful workers, thereby exploiting parallelism across different components to solve this problem efficiently. This paper follows this principle and presents MALib, a parallel framework that comprises a task control model, independent data servers, and an abstraction of MARL training paradigms. The source code is available at sjtu-marl/malib.
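The dispatching principle in the abstract can be pictured with a small, generic sketch. This is not MALib's API: the names `Worker`, `dispatch`, and the task fields `kind` and `steps` are hypothetical, chosen only to show a dispatcher that keeps no state of its own while each worker accumulates state across tasks. Each worker drains its own tasks in order on a separate thread, so different workers run in parallel without sharing mutable state.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Worker:
    """Hypothetical stateful worker: remembers how many steps it has processed."""
    name: str
    steps_done: int = 0

    def run(self, task: dict) -> dict:
        # The state lives in the worker, not in the dispatcher.
        self.steps_done += task["steps"]
        return {"worker": self.name, "kind": task["kind"],
                "total_steps": self.steps_done}


def dispatch(tasks: list, workers: dict) -> list:
    """Hypothetical stateless dispatcher: routes tasks by "kind", keeps nothing."""
    # Group tasks per worker so each worker's state is touched by one thread only.
    queues = {kind: [] for kind in workers}
    for task in tasks:
        queues[task["kind"]].append(task)

    def drain(kind: str) -> list:
        return [workers[kind].run(task) for task in queues[kind]]

    # One thread per worker; results come back grouped by worker, in dict order.
    with ThreadPoolExecutor(max_workers=len(workers)) as pool:
        batches = pool.map(drain, workers)
        return [result for batch in batches for result in batch]


if __name__ == "__main__":
    workers = {"rollout": Worker("rollout-0"), "train": Worker("train-0")}
    tasks = [
        {"kind": "rollout", "steps": 10},
        {"kind": "train", "steps": 5},
        {"kind": "rollout", "steps": 7},
    ]
    for result in dispatch(tasks, workers):
        print(result)
```

Because `dispatch` holds nothing between calls, it could be restarted or replicated without losing progress, while the counters survive inside the workers. A real system would replace the threads with distributed processes and the counters with policies or data buffers.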

BibTeX

@article{JMLR:v24:22-0169,
  author  = {Ming Zhou and Ziyu Wan and Hanjing Wang and Muning Wen and Runzhe Wu and Ying Wen and Yaodong Yang and Yong Yu and Jun Wang and Weinan Zhang},
  title   = {MALib: A Parallel Framework for Population-based Multi-agent Reinforcement Learning},
  journal = {Journal of Machine Learning Research},
  year    = {2023},
  volume  = {24},
  number  = {150},
  pages   = {1--12},
  url     = {http://jmlr.org/papers/v24/22-0169.html}
}